Thirty-five percent of global companies now use some form of artificial intelligence (AI) in their businesses, while 42 percent say they are exploring AI, according to an Exploding Topics trend analysis. Although the recent surge of generative AI brings many benefits, it also carries inherent risks.

As more organizations integrate AI into their processes, having a plan to mitigate risks such as data privacy breaches, biased programming and loss of control or transparency is vital.

Identifying and Protecting Against AI Cybersecurity Risks

Generative AI (gen AI), or AI that creates content such as text, audio, images and other types of data, is an incredibly useful tool for businesses across a variety of industries. Your organization may already be using tools such as ChatGPT, or implementing large language models (LLMs), to aid with content creation and output.

Generative AI and LLMs will continue to disrupt and transform our work processes, but deploying these technologies involves several risks, such as:

- Publication of incorrect or fictitious information: AI can generate content that is misleading or entirely false.

- Prompt injection attacks: Malicious inputs can manipulate the AI’s responses or behavior.

- Data breaches, leakage or exfiltration: Sensitive information can be exposed, compromising privacy and confidentiality.

- Data bias, manipulation and discrimination: AI models can perpetuate existing biases and unfairly favor certain groups over others.

- Model infection, evasion and poisoning: Adversarial attacks can corrupt or exploit the AI model to behave undesirably.

- Inaccurate generated results, including drift and hallucination: AI software may produce results that are factually incorrect or deviate over time.

- Legal, compliance and ethical violations: AI-generated content can inadvertently break laws or ethical standards.

- Denial of service attacks: Malicious users can overload the AI system, rendering it inoperable.

- Less rigorous testing standards and best practices: The rapid deployment of AI may bypass thorough testing and validation processes.

- Lack of accountability, transparency or explainability: It can be difficult to trace and understand how AI models arrive at their conclusions.
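As one illustration of how a single risk from the list above might be addressed, the denial of service item is often handled by limiting how many requests each client can send to an AI endpoint. The following is a minimal sketch of a token bucket rate limiter, not a production control. The class name `TokenBucket` and the capacity and refill values are assumptions chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class TokenBucket:
    """Hypothetical per-client limiter: each request spends one token."""

    capacity: float           # maximum burst size (assumed value)
    refill_per_second: float  # sustained request rate (assumed value)
    tokens: float = field(init=False)
    last_seen: float = field(init=False, default=0.0)

    def __post_init__(self) -> None:
        # Start with a full bucket so a new client can burst up to `capacity`.
        self.tokens = self.capacity

    def allow(self, now: float) -> bool:
        """Return True if a request arriving at time `now` (seconds) may proceed."""
        elapsed = max(0.0, now - self.last_seen)
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        self.last_seen = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


bucket = TokenBucket(capacity=2, refill_per_second=1)
decisions = [bucket.allow(0.0), bucket.allow(0.0), bucket.allow(0.0), bucket.allow(1.0)]
print(decisions)
```

Passing the timestamp in explicitly keeps the sketch easy to test. A deployed service would more likely read a monotonic clock and keep one bucket per user or API key.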

Generative AI and LLMs hold great promise for transforming work processes but come with significant risks. Overall, these potential threats highlight the importance of careful risk management and oversight in the deployment of gen AI.

Additionally, because AI systems are unique in nature, knowing how or what to test can be difficult, which makes it challenging to implement the appropriate standards, safeguards and best practices.

Ways To Mitigate Risk

To mitigate the risks associated with generative AI and LLMs, organizations should establish a comprehensive AI risk management governance program that includes acceptable use policies, risk assessments and robust data and security management practices. Implementing defense in depth and adhering to best cybersecurity practices is crucial. Ensuring model security through techniques such as model segmentation, input data validation, anomaly detection, and secure software development lifecycle (SDLC) practices, as well as integrating third-party risk management (TPRM), is essential. Protecting data within the model involves:

- Setting boundaries

- Employing storage and transport encryption

- Enforcing access control and de-identification

- Implementing data loss prevention (DLP) methods

- Ensuring prompt whitelisting
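Several of the data protection measures listed above can be pictured as a small gate that every request passes through before it reaches a model. The sketch below combines a length boundary, de-identification and prompt whitelisting (also called allowlisting). It is an assumption-laden illustration: the two regular expressions, the `ALLOWED_TASKS` set, the `MAX_PROMPT_CHARS` limit and the function names are all hypothetical, and real DLP tooling covers far more identifier types.

```python
import re

# Hypothetical patterns for two common identifiers; not an exhaustive DLP rule set.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
US_SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")

# Hypothetical allowlist of approved task types.
ALLOWED_TASKS = {"summarize", "translate", "classify"}

# Assumed boundary on input size.
MAX_PROMPT_CHARS = 2000


def deidentify(text: str) -> str:
    """Replace matched identifiers with neutral placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return US_SSN.sub("[SSN]", text)


def prepare_prompt(task: str, user_text: str) -> str:
    """Reject tasks that are not on the allowlist or inputs that are too long, then redact."""
    if task not in ALLOWED_TASKS:
        raise ValueError(f"task not on allowlist: {task!r}")
    if len(user_text) > MAX_PROMPT_CHARS:
        raise ValueError("input exceeds the configured length boundary")
    return f"{task}: {deidentify(user_text)}"


print(prepare_prompt("summarize", "Contact jane.doe@example.com, SSN 123-45-6789."))
```

Pattern-based redaction of this kind only catches identifiers that follow a predictable format, so it is best treated as one layer alongside access control and encryption, not a replacement for them.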

Organizations should also establish benchmarks and baselines for continuous evaluation; enhance and expand the maturity of their security stack; and design, automate and rigorously test threat detection and incident response measures.
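A baseline for continuous evaluation can be as simple as a set of past scores that each new measurement is compared against. The sketch below flags a new value that falls far outside the baseline, using a z-score as a basic form of anomaly detection. The function name `is_anomalous`, the sample scores and the threshold of three standard deviations are assumptions for illustration, not recommended settings.

```python
from statistics import mean, stdev


def is_anomalous(baseline: list[float], observed: float, z_threshold: float = 3.0) -> bool:
    """Flag `observed` if it lies more than `z_threshold` standard deviations
    from the baseline mean. The baseline needs at least two values."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: any different value counts as a deviation.
        return observed != mu
    return abs(observed - mu) / sigma > z_threshold


# Hypothetical weekly accuracy scores from a fixed benchmark set.
baseline_scores = [0.90, 0.91, 0.89, 0.92, 0.90]

print(is_anomalous(baseline_scores, 0.91))
print(is_anomalous(baseline_scores, 0.70))
```

The same comparison could be applied to other measurements, such as request volume or the rate of blocked prompts, with the flagged cases feeding into incident response.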

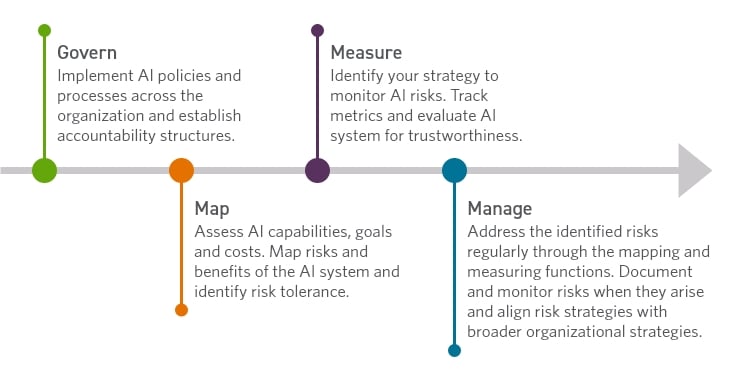

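Due to the relative newness of the AI landscape, strategies to best measure and manage cybersecurity risks are continually evolving. The National Institute of Standards and Technology (NIST) recently developed the Artificial Intelligence Risk Management Framework (AI RMF) to help businesses better mitigate AI risks. The NIST AI RMF outlines four steps, which NIST calls core functions, to establish trustworthy AI in your organization:

- Govern: Cultivate a culture of AI risk management across the organization.

- Map: Establish context and identify the risks related to it.

- Measure: Assess, analyze and track identified risks.

- Manage: Prioritize and act on risks based on their projected impact.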

To begin the process of risk mitigation, your organization can assess its current AI capabilities and strategies. Once you have established a baseline, you can begin to apply the NIST framework.

With a risk mitigation framework in place, your business can work toward crafting a trustworthy generative AI system. Characteristics of a trustworthy system include:

- Safe and reliable

- Secure data and enhanced privacy

- Explainable and interpretable

- Fair training data with no harmful bias

As the AI footprint rapidly expands across the globe, having an artificial intelligence cybersecurity strategy is imperative. Proactive risk management will ensure your organization has an efficient baseline to work from as regulations and frameworks continue to evolve.

How Cherry Bekaert Can Help

Cherry Bekaert’s Information Assurance & Cybersecurity practice offers a range of cybersecurity services to help protect your information systems and data from cyber threats. Our team can help you effectively manage the risks associated with AI solutions. We can aid in creating a comprehensive AI risk management blueprint and implementation strategy. Our professionals can assist with:

- Establishing a governing body to set appropriate AI guardrails and best practices

- Conducting thorough risk assessments and aligning AI initiatives with specific risk management priorities

- Implementing robust data and security management practices to mitigate potential threats

- Identifying and securing critical functions and processes where AI can add value while managing associated risks

- Developing continuous evaluation benchmarks and enhancing security measures to ensure ongoing protection

By partnering with Cherry Bekaert, you can confidently deploy AI solutions that are secure, compliant and aligned with your risk management objectives. The future of product and service development hinges on the intelligent leverage of AI, and we are ready to guide our clients forward through this evolving landscape.